The script could probably be expanded to do this on its own using something like PyCHM, but for now, you have to do this manually. There are lots of tools that can do this. You should get a folder that looks something like this:

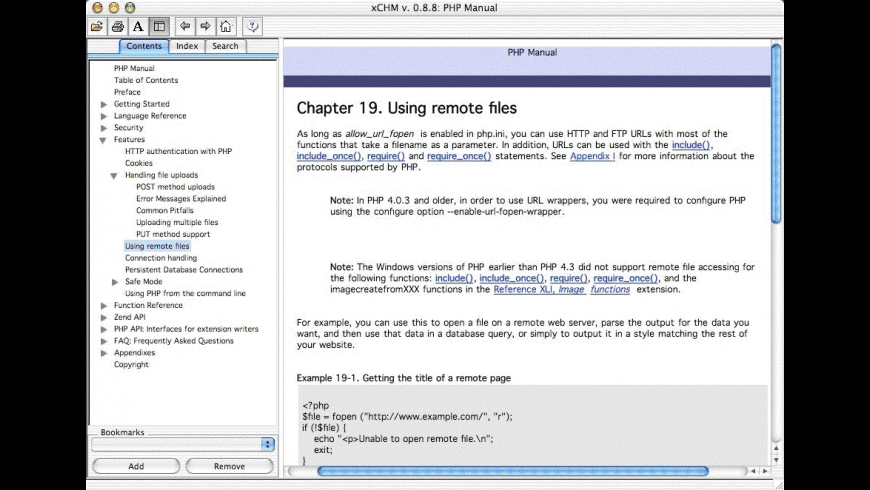

XCODE CHM READER CODE

Before we proceed to build the demo app, however, it’s important to understand that any barcode scanning in iOS, including QR code scanning, is totally based on video capture. The demo app that we’re going to build is fairly simple and straightforward.

A couple of lines of code set up the green box for highlighting the QR code. By calling the transformedMetadataObject(for:) method of viewPreviewLayer, the metadata object’s visual properties are converted to layer coordinates. From that, we can find the bounds of the QR code for constructing the green box. Lastly, we decode the QR code into human-readable information. The decoded information can be accessed by using the stringValue property of an AVMetadataMachineReadableCodeObject object.
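As a minimal sketch of the green box setup described above: the helper names `makeHighlightView` and `installHighlightView` are hypothetical and not part of the original demo app, and the border width of 2 is an arbitrary choice.

```swift
import UIKit

/// Builds a borderless-filled view with a green outline.
/// Hypothetical helper; the frame starts at .zero, so the box is
/// invisible until a detected code's bounds are assigned to it.
@MainActor
func makeHighlightView() -> UIView {
    let box = UIView()
    box.layer.borderColor = UIColor.green.cgColor
    box.layer.borderWidth = 2
    return box
}

/// Adds the box to `container` and moves it in front of the other subviews.
@MainActor
func installHighlightView(_ box: UIView, in container: UIView) {
    container.addSubview(box)
    container.bringSubviewToFront(box)
}
```

Once a QR code is found, assigning its converted bounds to the box's `frame` is enough to make the outline appear around the code.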

The metadataObjectTypes property is also quite important as this is the point where we tell the app what kind of metadata we are interested in. Now that we have set and configured an AVCaptureMetadataOutput object, we need to display the video captured by the device’s camera on screen. This can be done using an AVCaptureVideoPreviewLayer, which actually is a CALayer. You use this preview layer in conjunction with an AV capture session to display video. The preview layer is added as a sublayer of the current view. The code for this goes in the do-catch block.

The metadataObjects parameter of the delegate method is an array object, which contains all the metadata objects that have been read. The very first thing we need to do is make sure that this array is not nil, and it contains at least one object. Otherwise, we reset the size of qrCodeFrameView to zero and set messageLabel to its default message. If a metadata object is found, we check to see if it is a QR code. If that’s the case, we’ll proceed to find the bounds of the QR code.
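The preview layer and the delegate checks described above could look roughly like the sketch below. It assumes Swift 5 language mode (stricter concurrency checking may require adjustments to the delegate conformance). The class name, the `videoPreviewLayer` property, `attachPreviewLayer(for:)`, `handle(_:)`, and the default message text are illustrative assumptions; only `qrCodeFrameView` and `messageLabel` are names taken from the text.

```swift
import AVFoundation
import UIKit

final class QRScannerSketchViewController: UIViewController,
                                           AVCaptureMetadataOutputObjectsDelegate {
    var videoPreviewLayer: AVCaptureVideoPreviewLayer?   // assumed name
    let qrCodeFrameView = UIView()
    let messageLabel = UILabel()
    let defaultMessage = "No QR code is detected"        // assumed text

    /// Adds the camera preview for `session` as a sublayer of this view.
    /// Subviews that must stay visible need to be brought to the front afterwards.
    func attachPreviewLayer(for session: AVCaptureSession) {
        let layer = AVCaptureVideoPreviewLayer(session: session)
        layer.videoGravity = .resizeAspectFill
        layer.frame = view.layer.bounds
        view.layer.addSublayer(layer)
        videoPreviewLayer = layer
    }

    /// Delegate callback: receives every metadata object that was read.
    func metadataOutput(_ output: AVCaptureMetadataOutput,
                        didOutput metadataObjects: [AVMetadataObject],
                        from connection: AVCaptureConnection) {
        handle(metadataObjects)
    }

    func handle(_ metadataObjects: [AVMetadataObject]) {
        // Nothing was read: hide the box and restore the default message.
        guard let first = metadataObjects.first else {
            qrCodeFrameView.frame = .zero
            messageLabel.text = defaultMessage
            return
        }
        // Only continue if the object is a QR code.
        guard let code = first as? AVMetadataMachineReadableCodeObject,
              code.type == .qr else { return }
        // Convert to layer coordinates to get the bounds for the green box.
        if let converted = videoPreviewLayer?.transformedMetadataObject(for: code) {
            qrCodeFrameView.frame = converted.bounds
        }
        // The decoded, human-readable payload.
        if let text = code.stringValue {
            messageLabel.text = text
        }
    }
}
```

Splitting the logic into `handle(_:)` is a design choice of this sketch only; it keeps the checks callable without a live capture connection.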

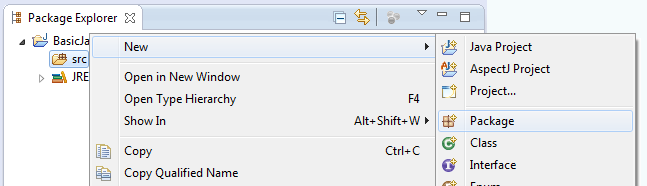

XCODE CHM READER SERIAL

First, we retrieve the device that supports the media type. To perform a real-time capture, we use the AVCaptureSession object and add the input of the video capture device. The AVCaptureSession object is used to coordinate the flow of data from the video input device to our output. In this case, the output of the session is set to an AVCaptureMetadataOutput object. The AVCaptureMetadataOutput class is the core part of QR code reading. This class, in combination with the AVCaptureMetadataOutputObjectsDelegate protocol, is used to intercept any metadata found in the input device (the QR code captured by the device’s camera) and translate it to a human-readable format.

Don’t worry if something sounds weird or if you don’t totally understand it right now; everything will become clear in a while. For now, the setup code for captureMetadataOutput goes in the do block of the viewDidLoad method.

When new metadata objects are captured, they are forwarded to the delegate object for further processing. When setting the delegate, we also specify the dispatch queue on which to execute the delegate’s methods. A dispatch queue can be either serial or concurrent. According to Apple’s documentation, the queue must be a serial queue. So, we use DispatchQueue.main to get the default serial queue.
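The session setup described here can be sketched as a single function. This is an illustration under assumptions, not the original demo code: `makeQRCaptureSession(delegate:)` and `CaptureSetupError` are hypothetical names, and the camera is obtained with `AVCaptureDevice.default(for:)` for brevity. Starting the session with `startRunning()` is deliberately left to the caller.

```swift
import AVFoundation

// Hypothetical error type for this sketch.
enum CaptureSetupError: Error {
    case noCamera, cannotAddInput, cannotAddOutput, qrNotSupported
}

/// Builds a capture session that forwards QR metadata to `delegate`.
func makeQRCaptureSession(
    delegate: AVCaptureMetadataOutputObjectsDelegate
) throws -> AVCaptureSession {
    // Retrieve a device that supports the video media type.
    guard let camera = AVCaptureDevice.default(for: .video) else {
        throw CaptureSetupError.noCamera
    }
    let input = try AVCaptureDeviceInput(device: camera)

    // The session coordinates data flow from the input to the output.
    let session = AVCaptureSession()
    guard session.canAddInput(input) else { throw CaptureSetupError.cannotAddInput }
    session.addInput(input)

    let output = AVCaptureMetadataOutput()
    guard session.canAddOutput(output) else { throw CaptureSetupError.cannotAddOutput }
    session.addOutput(output)

    // Delegate callbacks must run on a serial queue; the main queue is serial.
    output.setMetadataObjectsDelegate(delegate, queue: DispatchQueue.main)

    // Available types are only populated once the output is attached to a
    // session, so the type filter is set after addOutput.
    guard output.availableMetadataObjectTypes.contains(.qr) else {
        throw CaptureSetupError.qrNotSupported
    }
    output.metadataObjectTypes = [.qr]
    return session
}
```

The order matters in one place: assigning a metadata type that is not currently available raises an exception, which is why the sketch checks `availableMetadataObjectTypes` first.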

Assuming you’ve read the previous chapter, you should know that the AVCaptureDevice.DiscoverySession class is designed to find all available capture devices matching a specific device type.
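For readers who want a reminder, a discovery session lookup might look like the sketch below. The helper name `findBackCamera` is hypothetical, and the choice of the wide-angle device type and the back position is an assumption made for illustration.

```swift
import AVFoundation

/// Returns the first back-facing wide-angle camera, or nil if none is found.
/// Hypothetical helper, not part of the original demo app.
func findBackCamera() -> AVCaptureDevice? {
    let discovery = AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera],  // assumed device type
        mediaType: .video,
        position: .back)
    return discovery.devices.first
}
```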
